Build a Strong Foundation for Your Data-Driven Future

In an era where data drives critical decisions, the difference between success and stagnation often lies in how well an organization uses its information. Raw data is frequently messy, unstructured, and scattered across multiple sources, which makes meaningful insight hard to extract. This is where data engineering comes in: the discipline of designing, building, and maintaining the infrastructure needed to collect, store, process, and deliver data efficiently. Robust, scalable data pipelines are the backbone of data science, analytics, and machine learning, delivering clean, accurate, and timely data where it is needed. At Qubitstats, we specialize in turning unstructured data into strategic intelligence by optimizing data flow and integrity, so that businesses can improve decision-making, streamline operations, and gain a competitive edge.

This article explores the key aspects of data engineering, its importance, core components, tools, and best practices.

What is Data Engineering?

Data engineering is the practice of designing and constructing systems for data collection, storage, processing, and retrieval. It ensures that data is accessible, reliable, and optimized for analysis. Data engineers work closely with data scientists, analysts, and business stakeholders to create robust pipelines that transform raw data into usable formats.

Key Responsibilities of a Data Engineer:

- Data Ingestion – Collecting data from various sources (databases, APIs, logs, IoT devices).

- Data Storage – Structuring data in data warehouses, data lakes, or databases.

- Data Processing – Cleaning, transforming, and aggregating data for analysis.

- Data Pipeline Automation – Building workflows to move and process data efficiently.

- Scalability & Performance Optimization – Ensuring systems handle large-scale data efficiently.

- Data Governance & Security – Implementing policies for data quality, privacy, and compliance.
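To make the responsibilities above concrete, here is a minimal sketch of ingestion, processing, storage, and pipeline automation chained together. It uses only the Python standard library; the sample CSV, the `events` table, and the function names are illustrative assumptions, and a production pipeline would read from real sources and write to a warehouse rather than an in-memory SQLite database.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; a real pipeline would read from a database, API, or log file.
RAW_CSV = """user_id,event,amount
1,purchase,19.99
2,purchase,
1,purchase,5.01
3,refund,-4.00
"""

def ingest(raw_text):
    """Ingestion: parse raw CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))

def transform(rows):
    """Processing: drop rows with a missing amount and cast column types."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append((int(row["user_id"]), row["event"], float(row["amount"])))
    return cleaned

def load(rows, conn):
    """Storage: write cleaned rows into a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS events (user_id INTEGER, event TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO events VALUES (?, ?, ?)", rows)
    conn.commit()

def run_pipeline(raw_text, conn):
    """Pipeline automation: chain the three stages in order."""
    load(transform(ingest(raw_text)), conn)

conn = sqlite3.connect(":memory:")
run_pipeline(RAW_CSV, conn)
totals = conn.execute(
    "SELECT user_id, ROUND(SUM(amount), 2) FROM events GROUP BY user_id ORDER BY user_id"
).fetchall()
print(totals)
```

Keeping each stage as a separate function makes it easier to test the stages in isolation and to swap one out (for example, a different storage target) without touching the others.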

Why is Data Engineering Important?

- Enables Data Science & AI – Machine learning models require clean, structured data to function accurately.

- Improves Decision-Making – Reliable data pipelines ensure business leaders have timely, accurate insights.

- Supports Real-Time Analytics – Modern businesses need real-time data processing for instant decision-making.

- Reduces Data Silos – Proper engineering integrates disparate data sources into a unified system.

- Ensures Compliance – Proper data handling helps meet regulations like GDPR, HIPAA, and CCPA.

Core Components of Data Engineering

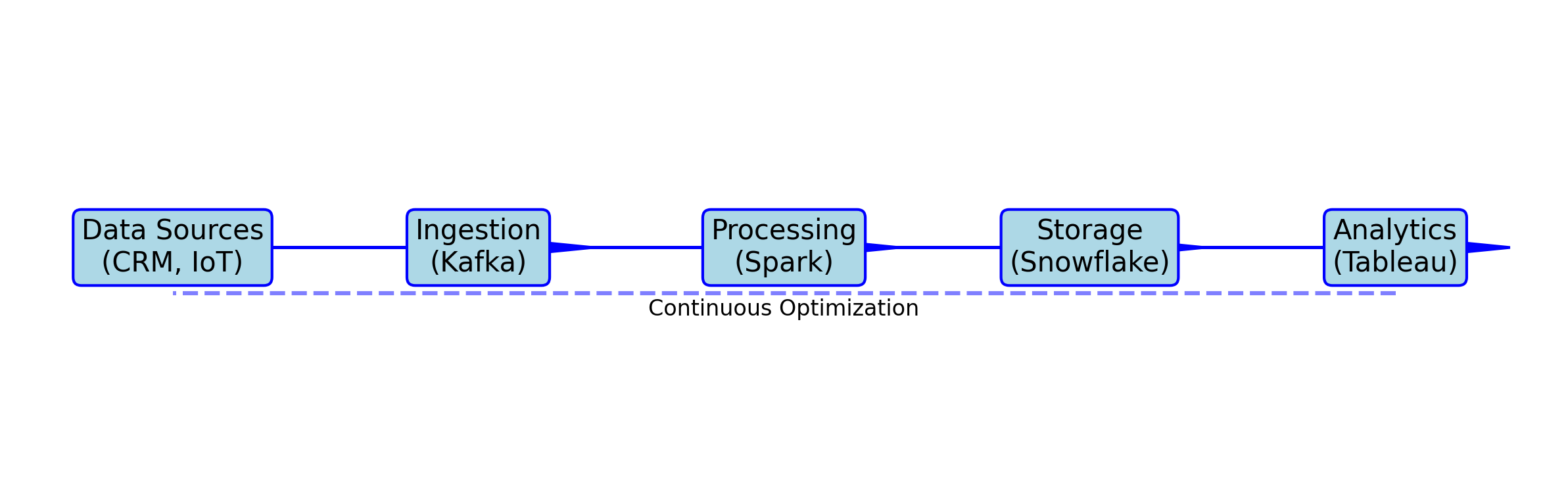

Data engineering involves building systems to collect, store, process, and analyze data. Its core components include:

- Data Ingestion: Collecting data from various sources (databases, APIs, streaming platforms), for example with Apache Kafka for event streams or Airflow-scheduled batch jobs.

- Data Storage: Storing data in scalable systems like data lakes (e.g., AWS S3), data warehouses (e.g., Snowflake), or databases (e.g., PostgreSQL).

- Data Processing: Transforming raw data through ETL/ELT pipelines using frameworks like Apache Spark or dbt for cleaning, aggregation, and enrichment.

- Data Orchestration: Automating and scheduling workflows with tools like Airflow or Prefect to ensure reliable pipeline execution.

- Data Quality: Ensuring accuracy and consistency through validation, monitoring, and testing with tools like Great Expectations.

- Data Governance: Managing access, security, and compliance using metadata catalogs (e.g., Apache Atlas) and policies.

- Infrastructure: Leveraging cloud platforms (AWS, GCP, Azure) or containerization (Docker, Kubernetes) for scalable, resilient systems.
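The orchestration component can be illustrated with a small sketch: tasks declare what they depend on, and a scheduler runs them in a valid order. This is not how Airflow or Prefect are implemented or configured; it is a simplified stand-in using Python's standard `graphlib` module (Python 3.9+), and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical task names; each maps to the set of tasks it depends on.
DEPENDENCIES = {
    "ingest": set(),
    "store_raw": {"ingest"},
    "transform": {"store_raw"},
    "quality_checks": {"transform"},
    "publish": {"quality_checks"},
}

def run_workflow(dependencies, tasks):
    """Run each task only after all of its dependencies have finished."""
    executed = []
    for name in TopologicalSorter(dependencies).static_order():
        tasks[name]()
        executed.append(name)
    return executed

log = []
# Placeholder callables that only record that they ran.
TASKS = {name: (lambda n=name: log.append(f"ran {n}")) for name in DEPENDENCIES}
order = run_workflow(DEPENDENCIES, TASKS)
print(order)
```

Real orchestrators add scheduling, retries, and alerting on top of this dependency-ordering idea.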

Popular Data Engineering Tools & Technologies

| Category | Tools & Technologies |
| --- | --- |
| Databases | PostgreSQL, MySQL, Cassandra, MongoDB |
| Big Data | Hadoop, Spark, Hive, Presto |
| Data Warehousing | Snowflake, BigQuery, Redshift |
| Streaming | Kafka, Flink, Spark Streaming |
| Orchestration | Airflow, Dagster, Prefect |
| Cloud Platforms | AWS (Glue, EMR), GCP (Dataflow), Azure (Synapse) |

The Challenges Businesses Face Without Robust Data Engineering

- Fragmented Data Sources and Poor Quality: Disparate systems—CRMs, ERPs, IoT devices, and more—generate siloed data, riddled with inconsistencies, duplicates, and errors.

- Slow or Unreliable Pipelines: Legacy systems or ad-hoc processes lead to delays, leaving teams waiting hours (or days) for usable data.

- Scaling Struggles: As data volumes grow exponentially, infrastructure buckles, unable to handle increased demand.

- Lack of Real-Time Access: Delayed data means missed opportunities, especially in industries where timing is critical (e.g., e-commerce, finance).
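As a small illustration of the fragmented-sources problem, the sketch below merges customer records from two systems and removes duplicates that differ only in formatting. The records, field names, and the choice of email as the matching key are assumptions made for the example; real entity resolution is usually more involved.

```python
# Hypothetical records from two systems describing overlapping customers.
crm_records = [
    {"email": "Ana@Example.com ", "name": "Ana Diaz"},
    {"email": "bo@example.com", "name": "Bo Chen"},
]
erp_records = [
    {"email": "ana@example.com", "name": "Ana Diaz"},
    {"email": "cy@example.com", "name": "Cy Roy"},
]

def normalize_email(email):
    """Trim whitespace and lowercase so equivalent addresses compare equal."""
    return email.strip().lower()

def unify(*sources):
    """Merge records from several sources, keeping the first record seen per email."""
    unified = {}
    for source in sources:
        for record in source:
            key = normalize_email(record["email"])
            unified.setdefault(key, {**record, "email": key})
    return list(unified.values())

customers = unify(crm_records, erp_records)
print(len(customers))
```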

The Opportunity: Unlock Your Data’s Full Potential

Data engineering bridges these gaps, offering:

- Seamless Data Flow: Unified pipelines that connect sources and deliver consistent data.

- Accuracy and Trust: Cleansed, validated datasets you can rely on.

- Advanced Analytics Enablement: The foundation for machine learning, predictive modeling, and real-time dashboards.

- Scalability: Infrastructure that evolves with your business, from gigabytes to petabytes.

From a data science perspective, robust pipelines ensure that analysts and data scientists spend their time uncovering insights—not wrestling with broken processes. This translates to faster time-to-value and a higher ROI on your data investments.

How We Help: Data Engineering That Drives Results

At Qubitstats, our data engineering services are designed to solve these challenges and position your business for success. We combine technical expertise, cutting-edge tools, and a client-centric approach to deliver pipelines that are reliable, efficient, and future-ready.

Our Proven Five-Step Approach

- Assessment & Planning

  - We start with a deep dive into your current data ecosystem, evaluating sources, workflows, and pain points.

  - Using diagnostic tools and stakeholder interviews, we pinpoint inefficiencies and map out a tailored roadmap.

  - Key Deliverable: A comprehensive assessment report with actionable recommendations.

- Pipeline Design & Development

  - We architect custom pipelines to ingest, process, and store data efficiently.

  - Leveraging tools like Apache Airflow for orchestration and dbt for transformation, we ensure modularity and performance.

  - Focus: Optimizing for your specific use cases, whether batch processing or streaming data.

- Integration & Automation

  - We connect pipelines to your existing systems (e.g., Salesforce, Google Analytics) and automate workflows.

  - Result: Reduced manual effort, fewer errors, and a unified data ecosystem.

- Quality Assurance

  - We implement rigorous validation checks (e.g., schema enforcement, anomaly detection) and cleansing routines.

  - Outcome: High-quality data that fuels trustworthy analytics and decision-making.

- Scalability & Optimization

  - Built on cloud platforms like AWS, Azure, or GCP, our solutions scale effortlessly with your growth.

  - We fine-tune performance, reducing latency and costs, using techniques like partitioning and indexing.
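The validation checks mentioned under Quality Assurance can be sketched in a few lines. The example below is an illustration, not a description of any specific tooling: the schema, column names, and sample values are hypothetical, and a simple z-score rule stands in for anomaly detection, which in practice may use more robust methods.

```python
from statistics import mean, stdev

# Hypothetical schema: column name -> required Python type.
SCHEMA = {"user_id": int, "latency_ms": float}

def enforce_schema(record, schema=SCHEMA):
    """Return a list of schema violations for one record (empty if valid)."""
    errors = []
    for column, expected_type in schema.items():
        if column not in record:
            errors.append(f"missing column: {column}")
        elif not isinstance(record[column], expected_type):
            errors.append(f"{column}: expected {expected_type.__name__}")
    return errors

def find_anomalies(values, threshold=2.0):
    """Flag values whose z-score magnitude exceeds the threshold."""
    mu, sigma = mean(values), stdev(values)
    if sigma == 0:
        return []  # all values identical, nothing to flag
    return [v for v in values if abs(v - mu) / sigma > threshold]

records = [
    {"user_id": 1, "latency_ms": 100.0},
    {"user_id": "2", "latency_ms": 102.0},  # wrong type for user_id
    {"user_id": 3},                          # latency_ms missing
]
violations = [enforce_schema(r) for r in records]

latencies = [100.0, 102.0, 98.0, 101.0, 99.0, 100.0, 103.0, 97.0, 500.0]
outliers = find_anomalies(latencies)
print(violations, outliers)
```

Records that fail the schema check would typically be quarantined for review rather than silently dropped, so that upstream issues stay visible.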

Tools We Use

- Ingestion: Apache Kafka, Fivetran

- Processing: Apache Spark, Databricks

- Storage: Snowflake, BigQuery, Redshift

- Orchestration: Airflow, Prefect

- Monitoring: Prometheus, Grafana

This data science-driven toolkit ensures your infrastructure is not just functional but optimized for analytics workloads.

Real-World Example: Transforming a SaaS Business with Data Engineering

Client Profile

- Industry: Software-as-a-Service (SaaS)

- Size: Mid-sized, 200 employees, 50,000+ users

- Goal: Enhance customer retention through real-time insights

The Challenge

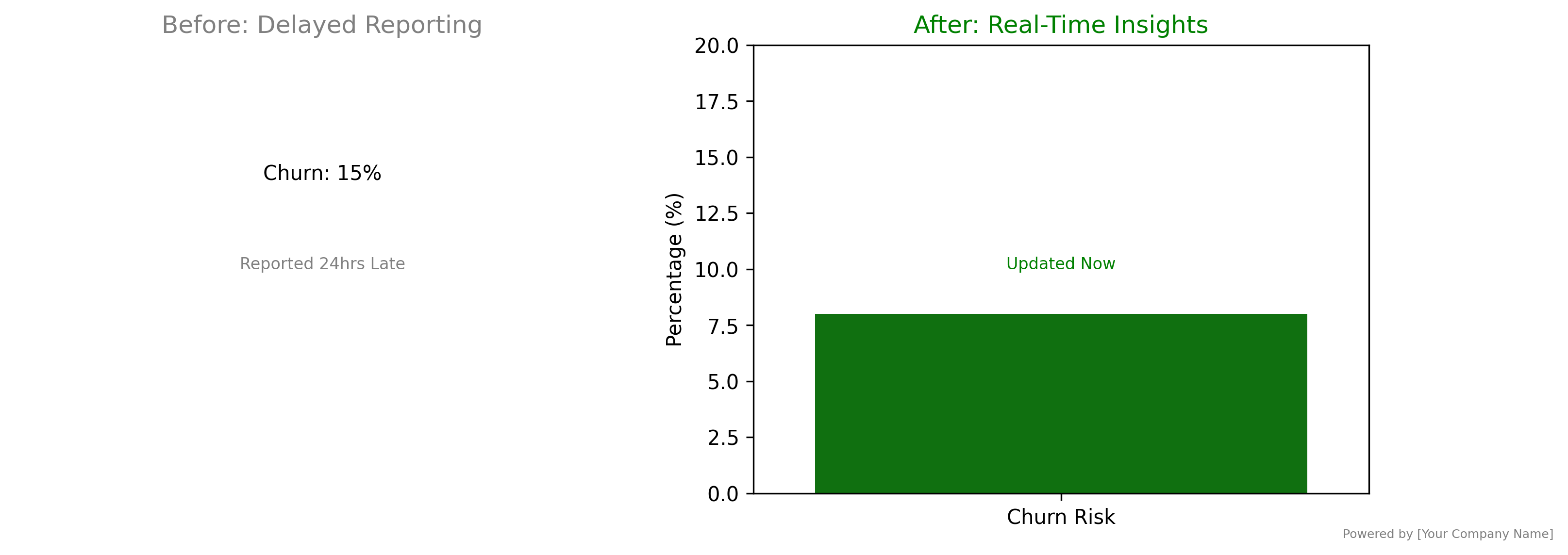

- Outdated Pipelines: Legacy ETL processes took 12+ hours to process daily user activity data, delaying reporting.

- Data Silos: Customer data was split across PostgreSQL databases, third-party APIs, and log files, leading to incomplete insights.

- Retention Risk: Without real-time analytics, the client couldn’t proactively address churn, losing 15% of customers annually.

Our Solution

- Modernized Architecture: Replaced batch ETL with a streaming pipeline using Apache Kafka for real-time data ingestion and Snowflake for storage.

- Unified Data Model: Integrated siloed sources into a single schema, enriched with behavioral data (e.g., feature usage).

- Automation & Monitoring: Deployed Airflow to automate workflows and set up alerts for pipeline failures.

- Analytics Enablement: Built a real-time dashboard in Tableau, powered by cleansed, aggregated data.
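To show the kind of aggregation a streaming pipeline performs on behavioral data such as feature usage, here is a minimal sketch of tumbling-window counting. It is not the client's implementation: a plain Python list stands in for a stream consumer, the event tuples are invented, and the 60-second window is an arbitrary assumption.

```python
from collections import Counter, defaultdict

# Hypothetical (timestamp_seconds, user_id, feature) events; in production these
# would arrive from a streaming consumer rather than a list.
EVENTS = [
    (0, "u1", "export"),
    (12, "u2", "search"),
    (45, "u1", "search"),
    (61, "u1", "export"),
    (75, "u3", "search"),
    (130, "u2", "export"),
]

def tumbling_window_counts(events, window_seconds=60):
    """Count feature usage per fixed, non-overlapping time window."""
    windows = defaultdict(Counter)
    for timestamp, _user_id, feature in events:
        # Align each event to the start of the window it falls into.
        window_start = (timestamp // window_seconds) * window_seconds
        windows[window_start][feature] += 1
    return {start: dict(counts) for start, counts in sorted(windows.items())}

usage = tumbling_window_counts(EVENTS)
print(usage)
```

A true streaming job would emit each window's result as soon as the window closes instead of waiting for the full list, but the grouping logic is the same.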

Results

- 60% Shorter Runtime: Reduced batch pipeline runtime from 12 hours to 4.8 hours, with streaming data available in seconds.

- 10% Retention Boost: Real-time churn predictions enabled proactive outreach, saving $1.2M in annual revenue.

- Improved Trust: Data quality issues dropped by 85%, empowering confident decision-making.

- Scalability Achieved: The system now handles 3x the previous data volume without performance degradation.

Analytics Perspective

Through a data science lens, this transformation unlocked predictive modeling (e.g., churn propensity scores) and segmentation analysis, directly tying engineering efforts to business outcomes. The client’s data team shifted from firefighting to innovation, a hallmark of effective data engineering.

Key Benefits Clients Care About

Our clients—ranging from e-commerce platforms to financial firms—seek measurable value. Here’s what our data engineering delivers:

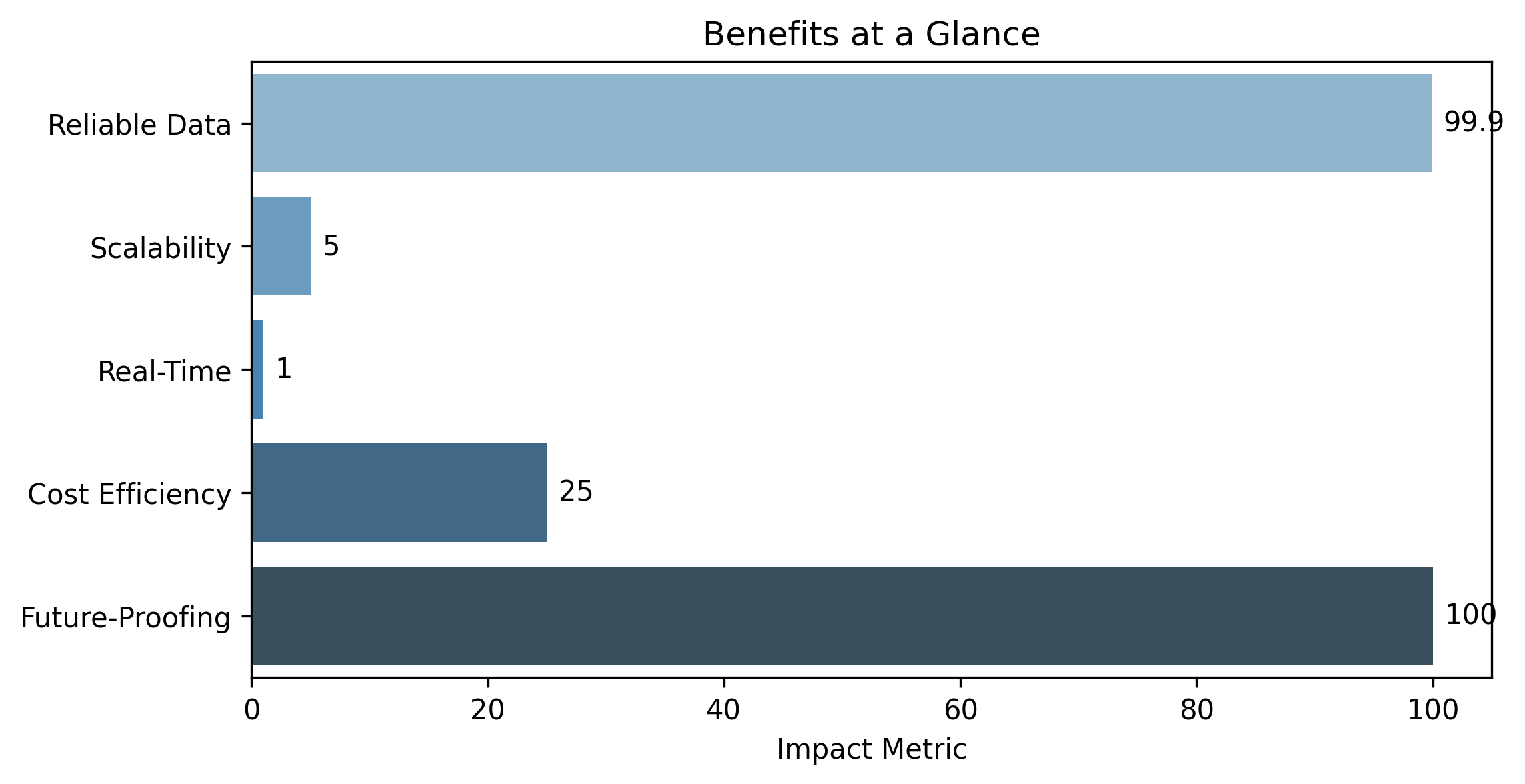

- Reliable Data Flow

  - Consistent, error-free data across systems, eliminating “garbage in, garbage out” scenarios.

  - Example Metric: 99.9% pipeline uptime.

- Scalability Without Headaches

  - Infrastructure that grows from terabytes to petabytes without re-engineering.

  - Example: A retail client handled 5x holiday traffic with zero downtime.

- Real-Time Insights

  - Sub-second data availability for dashboards, alerts, and models.

  - Use Case: Fraud detection in fintech, catching anomalies instantly.

- Cost Efficiency

  - Automation reduces labor costs; optimization lowers cloud spend.

  - Example: 25% reduction in AWS bills through resource tuning.

- Future-Proofing

  - A foundation for AI, machine learning, and advanced analytics (e.g., NLP, time-series forecasting).

  - Example: A healthcare client launched a predictive maintenance model post-engagement.
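For context on the 99.9% uptime metric listed above, the short calculation below converts an uptime percentage into an allowed downtime budget. The function name and the 30-day month are assumptions made for the illustration.

```python
def downtime_budget_hours(uptime_percent, period_hours):
    """Hours of allowed downtime for a given uptime target over a period."""
    return period_hours * (1 - uptime_percent / 100)

HOURS_PER_YEAR = 365 * 24  # 8760, ignoring leap years
yearly = downtime_budget_hours(99.9, HOURS_PER_YEAR)
monthly = downtime_budget_hours(99.9, 30 * 24)  # assumes a 30-day month
print(round(yearly, 2), round(monthly, 2))
```

At 99.9%, the budget works out to roughly 8.76 hours per year, or about 43 minutes in a 30-day month.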

Why Partner With Us?

- Expertise: Our team of data engineers and scientists averages 10+ years of experience, with certifications in AWS, GCP, and Databricks.

- Tailored Solutions: No cookie-cutter fixes—your business goals drive our designs.

- Cutting-Edge Stack: We stay ahead of the curve, adopting tools that maximize ROI.

- Proven Track Record: 50+ clients, $50M+ in value created through faster insights and efficiency gains.

Take the Next Step: Your Data Infrastructure Assessment

Ready to turn your data into a competitive edge? Contact us for a complimentary Data Infrastructure Assessment. We’ll analyze your current setup, identify bottlenecks, and deliver a roadmap to optimize your workflows. Whether you’re starting from scratch or refining an existing system, we’ll show you how data engineering can accelerate your goals.

Lay the Groundwork for a Data-Driven Future

Data engineering isn’t just plumbing—it’s the strategic enabler of your analytics, AI, and business intelligence initiatives. With Qubitstats, you’re not just building pipelines; you’re building a foundation for growth, innovation, and resilience. Let’s partner to transform your data into your greatest asset.
